Projects I have worked on

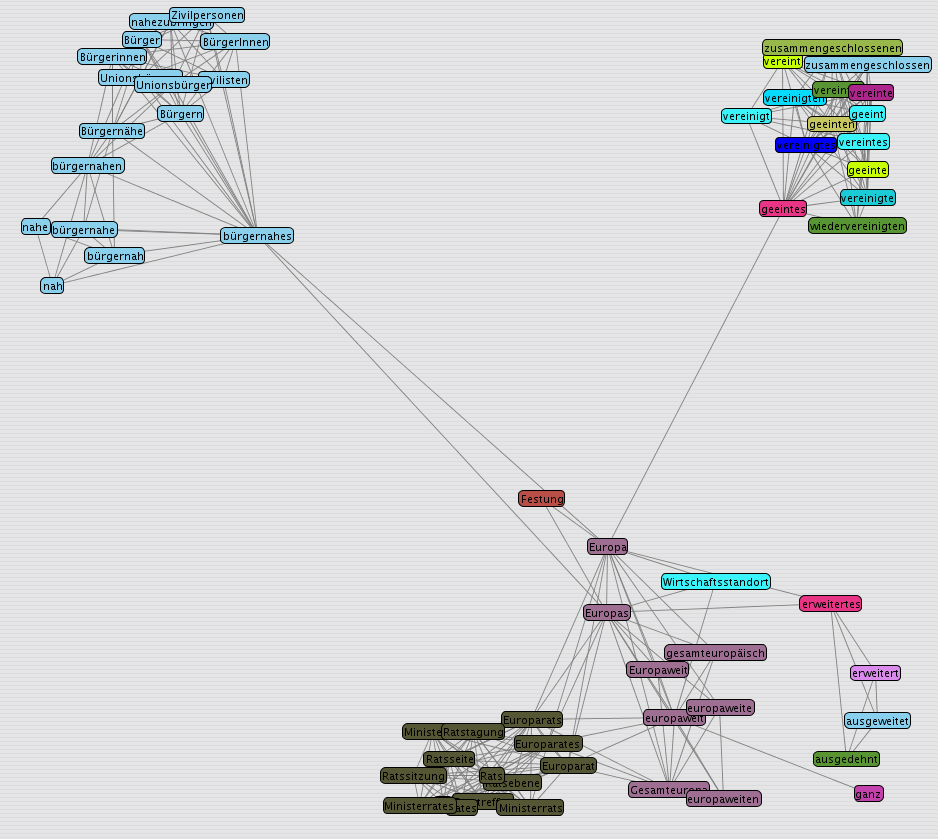

In mai 2007 I finished my Diploma Thesis "Automatische Erstellung von Wörterbüchern aus Paralleltexten - Ein sprachunabhängige Ansatz" which is available as pdf. Maybe you want to see some nice pictures concerning my diploma thesis (only monolingual part of it)?

In last time I have investigated natural language processing. As a result 2 projects have been created:

Poser

This tool allows you to analyse big mono and bilingual texts and produces wordlists with frequencies. Additional it generates 2 gram statistics( neighbour and sentence cooccurences).

features:

open source (GNU public license)

also works with big texts (f.e. fr-de europarl (180/177 MB text ; 1M sentences; 27/33M tokens; 290/120k types in less than 3h (800MhzPIII and 768MB RAM) generating alll statistics (word list, neighbour- sentence- and bilingual- cooccurences, sorting))

different filters for cooccurences (significance or frequency or all ;) )

fully supports unicode

runs on Windows Mac and Linux/Unix, (all is written in Java, includes platform depending starting scripts)

select output type (words or word numbers)

sort files

simple word lists as input possible

planned features:

different measures (coming soon)

memory improvements for huge word lists

speed improvements

support for XCES and multi word lists

other things TODO:

clean source code

clean output (using a logging API)

improve documentation

improve command line parameters (quite messy)

(Unix, Mac and Linuxer should choose the “tar.gz” to preserve the executable permissions. “src”- files include only source code, and “bin” only binaries)

ParText

This package includes the Poser and can be used to investigate parallel texts. A graphical user interface lets you search in bilingual text, shows a sentence in original and translation and tries to link the words with it's translation.

features:

easy to handle (only need one command with 2 textfiles as parameter to generate statistic, and a second command to show results) (OK everything is relative ;) )

includes poser

includes simple tool to extract parallel texts from open source bible, so you don't need a parallel text

powerful open source search engine LUCENE is used (search for conditions in both languages at the same time, ranked results (more than boolean), use wildcards)

runs on Windows Mac and Linux/Unix, (all is written in Java, includes platform depending starting scripts)

fully supports unicode

open source (GNU public license)

planned features

more easily use own text with existing statistics (also possible at the moment)

other things TODO:

remove some bugs (f.e. visual bug with arabic text, visual bug with legend)

documentation

because this program was my first SWING application the source is very ugly ;)